AI meets data protection: our ChatGPT solution for corporate knowledge

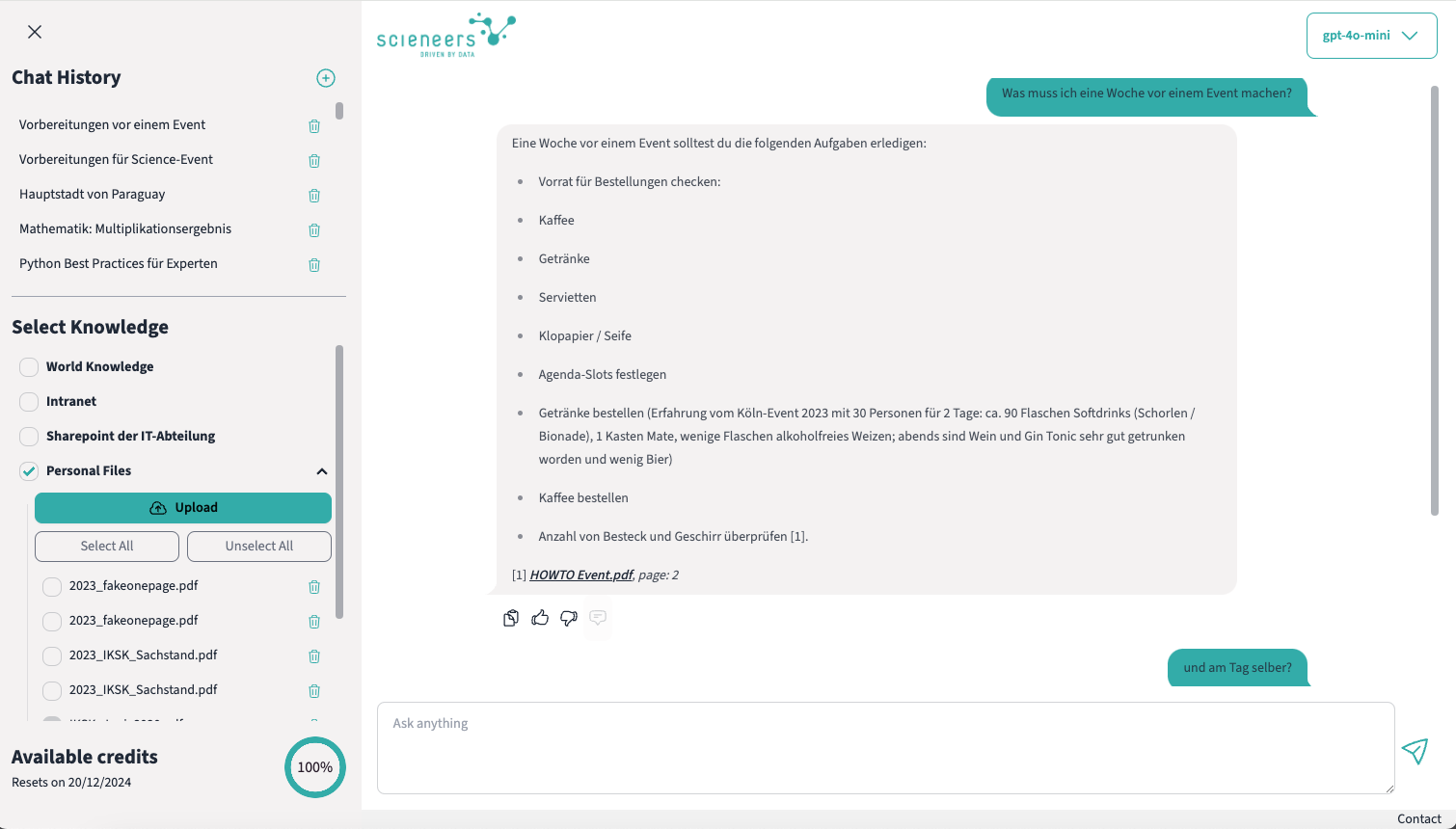

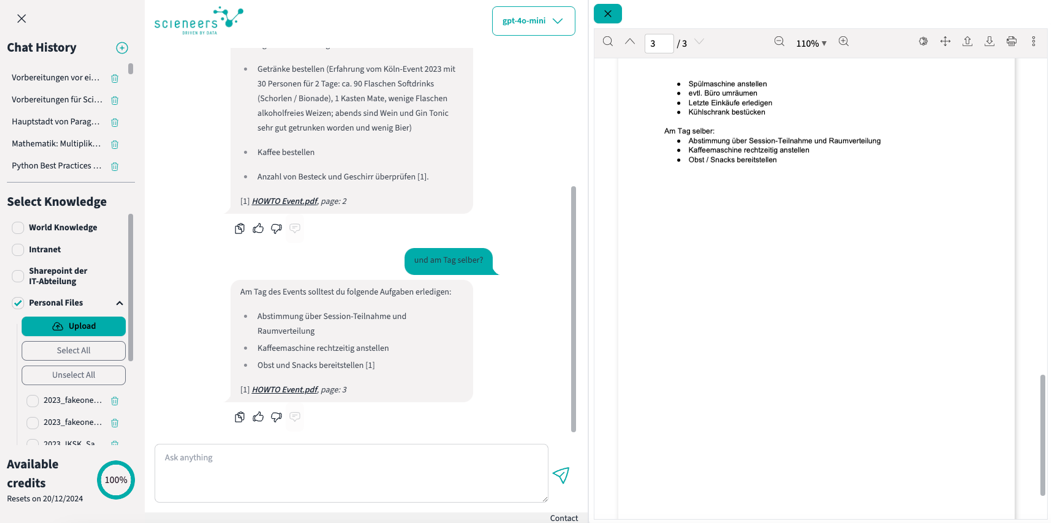

Everyone knows ChatGPT – a chatbot that provides answers to almost any question. But in many companies, its use is not yet officially permitted, or the tool is not made available at all. Yet ChatGPT can be operated securely and in compliance with data protection regulations while giving employees easy access to internal company knowledge. Based on experience from numerous Retrieval-Augmented Generation (RAG) projects, we have developed a modular system tailored specifically to the needs of medium-sized companies and organizations. In this article, we present our lightweight and customizable chatbot, which provides data protection-compliant access to company knowledge.

1. Use your own sources of knowledge

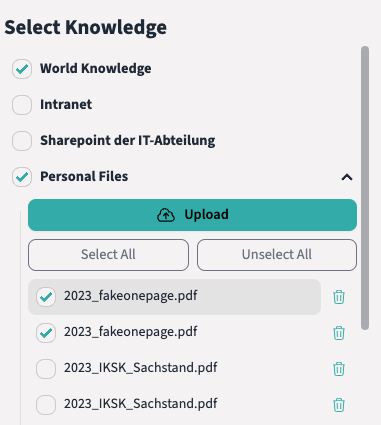

At the core of our RAG system, a large language model (LLM) draws on company-specific knowledge sources. Various data sources can be made available to the chatbot:

- User-specific documents:
  - Employees can upload their own files, e.g. PDF documents, Word files, Excel spreadsheets or even videos. These are processed in the background and, after a short time, remain permanently available for chatting. The processing status can be viewed at any time, so it is always clear when the content can be used for queries.
  - Example: A sales employee uploads an Excel spreadsheet with price information. The system can then answer questions about the prices of specific products.

- Global internal company knowledge sources:
  - The system can access central documents from platforms such as SharePoint, OneDrive or the intranet. This data is accessible to all users.
  - Example: An employee wants to look up the company pension scheme regulations. Because the relevant company agreement is accessible, the chatbot can provide the correct answer.

- Group-specific knowledge sources:
  - Information and documents can also be made accessible only to specific teams or departments.
  - Example: Only the HR team has access to the onboarding guidelines, so the system answers questions about them only for HR employees.

- Individual and group-based budgets: A budget is defined for individual employees or teams for a specific period. It does not have to be a euro amount; it can also be expressed in a virtual currency of your own.

- Transparency for users: All employees can view their current budget status at any time. The system shows how much of the budget has already been used and how much is still available. Once the set limit is reached, the chat pauses automatically until the budget is reset or adjusted.

- Authentication systems: Our solution can be connected to different authentication methods, including widely used systems such as Microsoft Entra ID (formerly Azure Active Directory). This makes it possible to integrate existing company structures for user management seamlessly.

- Access control: Different permissions can be defined based on user roles, e.g. for access to specific knowledge sources or functions.

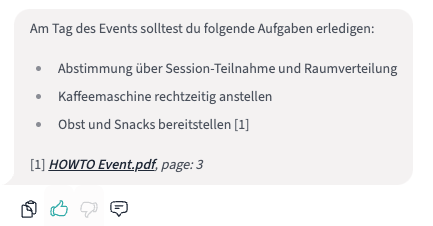

- Chat history: All chats are named and saved automatically. If they wish, users can delete chats completely, which also removes them permanently from the system.

- Citations: Citations keep information traceable and verifiable. Especially for complex or business-critical questions, this strengthens credibility and lets users check the accuracy and context of the answers directly. Each answer contains references to the original document sources, for example links to the exact page in a PDF or to the original document.

- Easy customization of prompts: The prompts that control the system's responses can be customized via a user-friendly interface, without any prior technical knowledge.

- Output of different media types: Different output formats, such as code blocks or formulas, are rendered appropriately in the responses.
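The three kinds of knowledge sources described above differ mainly in who may see them. A minimal sketch of how such visibility rules could be enforced before retrieval, assuming every document carries a scope, an owner and a set of group names. The classes, scope names and group names are hypothetical and not taken from our code base:

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    USER = "user"      # uploaded by one employee, visible only to that employee
    GROUP = "group"    # restricted to specific teams or departments
    GLOBAL = "global"  # e.g. SharePoint, OneDrive or intranet content for all users


@dataclass(frozen=True)
class Document:
    doc_id: str
    scope: Scope
    owner: str | None = None              # only used for USER scope
    groups: frozenset[str] = frozenset()  # only used for GROUP scope


@dataclass(frozen=True)
class User:
    user_id: str
    groups: frozenset[str] = frozenset()


def can_access(user: User, doc: Document) -> bool:
    """Return True if the user may see the document."""
    if doc.scope is Scope.GLOBAL:
        return True
    if doc.scope is Scope.USER:
        return doc.owner == user.user_id
    # GROUP scope: the user needs at least one matching group membership
    return bool(user.groups & doc.groups)


def accessible_documents(user: User, docs: list[Document]) -> list[Document]:
    """Apply the permission check before retrieval, so that documents the user
    may not see are never passed to the LLM as context."""
    return [d for d in docs if can_access(user, d)]


docs = [
    Document("pension-agreement", Scope.GLOBAL),
    Document("onboarding-guidelines", Scope.GROUP, groups=frozenset({"hr"})),
    Document("price-list.xlsx", Scope.USER, owner="alice"),
]
alice = User("alice", frozenset({"sales"}))
bob = User("bob", frozenset({"hr"}))

print([d.doc_id for d in accessible_documents(alice, docs)])
# ['pension-agreement', 'price-list.xlsx']
print([d.doc_id for d in accessible_documents(bob, docs)])
# ['pension-agreement', 'onboarding-guidelines']
```

Filtering by permission before retrieval, rather than after generation, means restricted content can never leak into an answer, regardless of how the prompt is phrased.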

Filtering and selecting knowledge sources

Even if users work with all available data sources, the system ensures in the background that only information relevant to a query is used to generate answers. Intelligent filter mechanisms automatically hide irrelevant content.

Users do, however, have the option of explicitly specifying which knowledge sources should be taken into account. For example, they can choose whether a query should access current quarterly figures or general HR guidelines. This prevents irrelevant or outdated information from being included in the response.
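How relevance filtering and an explicit source selection could interact is sketched below, assuming the chunks have already been embedded. The toy two-dimensional vectors stand in for real embeddings, and the threshold `min_score=0.75`, the `top_k` value and the source labels are hypothetical values chosen for illustration:

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    source: str                   # hypothetical source label, e.g. "hr-guidelines"
    embedding: tuple[float, ...]  # vector from some embedding model (assumed)


def cosine(a, b) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_emb, chunks, selected_sources=None, min_score=0.75, top_k=3):
    """Return up to top_k chunks that pass the relevance threshold.

    selected_sources: optional set of source labels the user picked;
    None means all available sources are searched.
    """
    candidates = [c for c in chunks
                  if selected_sources is None or c.source in selected_sources]
    scored = [(cosine(query_emb, c.embedding), c) for c in candidates]
    relevant = [(s, c) for s, c in scored if s >= min_score]  # hide weak matches
    relevant.sort(key=lambda pair: pair[0], reverse=True)     # best match first
    return [c for _, c in relevant[:top_k]]


chunks = [
    Chunk("Quarterly figures, revenue section", "quarterly-figures", (1.0, 0.0)),
    Chunk("Remote work rules", "hr-guidelines", (0.0, 1.0)),
    Chunk("Quarterly figures, cost section", "quarterly-figures", (0.9, 0.1)),
]
query = (1.0, 0.0)  # toy embedding of a question about revenue

print([c.text for c in retrieve(query, chunks)])
# ['Quarterly figures, revenue section', 'Quarterly figures, cost section']
print([c.text for c in retrieve(query, chunks, selected_sources={"hr-guidelines"})])
# []
```

The second call returns nothing: the user restricted the query to HR guidelines, and the only HR chunk is not relevant enough, so no unrelated content reaches the prompt.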

2. Feedback process and continuous improvement

A central component of our RAG solution is the ability to systematically collect feedback from users in order to identify weaknesses and thereby improve the quality of the system. Weak points could include, for example, documents with inconsistent formats, such as poorly scanned PDFs, or tables with multiple nested levels that are interpreted incorrectly.
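One way an automated analysis could surface such weak points, assuming each user rating (positive or negative) is stored together with the documents cited in the rated answer: aggregate the ratings per document and flag sources that collect many negative votes. The data model, thresholds and file names below are hypothetical:

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    message_id: str
    thumbs_up: bool
    cited_docs: tuple[str, ...]  # sources cited in the rated answer (assumed to be logged)
    comment: str = ""            # optional free-text comment


def weak_documents(feedback, min_votes=3, max_approval=0.5):
    """Return (doc_id, approval_rate, votes) for documents whose answers are
    rated poorly, worst first. Documents with fewer than min_votes ratings
    are skipped to avoid flagging on too little data."""
    ups: dict[str, int] = defaultdict(int)
    votes: dict[str, int] = defaultdict(int)
    for fb in feedback:
        for doc in set(fb.cited_docs):  # count each document once per rating
            votes[doc] += 1
            ups[doc] += int(fb.thumbs_up)
    flagged = [(doc, ups[doc] / votes[doc], votes[doc])
               for doc in votes
               if votes[doc] >= min_votes and ups[doc] / votes[doc] <= max_approval]
    return sorted(flagged, key=lambda item: item[1])


feedback = [
    Feedback("m1", False, ("scan-2019.pdf",), "Table values are mixed up"),
    Feedback("m2", False, ("scan-2019.pdf", "handbook.docx")),
    Feedback("m3", True, ("scan-2019.pdf",)),
    Feedback("m4", True, ("handbook.docx",)),
    Feedback("m5", True, ("handbook.docx",)),
]
for doc, rate, n in weak_documents(feedback):
    print(f"{doc}: {rate:.0%} positive out of {n} ratings")
# scan-2019.pdf: 33% positive out of 3 ratings
```

A flagged document is a candidate for manual review, for example re-scanning the PDF or converting a nested table into a flatter format before it is indexed again.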

Users can easily give feedback on any message via the “thumbs up/down” icons and add an optional comment. Admins can evaluate this feedback manually, or it can be processed by automated analyses to identify potential for optimizing the system.

3. Budget management – control over usage and costs

Using LLMs together with proprietary knowledge sources in a data protection-compliant way offers companies enormous opportunities, but it also incurs costs. Well-thought-out budget management helps to use resources fairly and efficiently and to keep costs under control.

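How does budget management work?

In short: a budget is set for each employee or team for a defined period, optionally in a virtual currency; users can track their consumption at any time, and the chat pauses automatically once the limit is reached.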
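A minimal sketch of this budget logic, assuming the cost of each LLM request is known in euros and converted into a virtual currency at a fixed, hypothetical rate of 100 credits per euro. The class names, key format and limits are illustrative; budgets are kept in memory here only to keep the example self-contained:

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical exchange rate between model costs and the virtual currency.
CREDITS_PER_EURO = 100


@dataclass
class Budget:
    limit: int      # credits available in the current period
    used: int = 0

    @property
    def remaining(self) -> int:
        return max(self.limit - self.used, 0)


class BudgetManager:
    """In-memory per-user and per-team budgets (illustrative only)."""

    def __init__(self) -> None:
        self.budgets: dict[str, Budget] = {}  # keys such as "user:alice", "team:sales"
        self.team_of: dict[str, str] = {}     # user -> team

    def _keys(self, user: str) -> list[str]:
        """Budget keys that apply to this user (own budget and team budget)."""
        keys = [f"user:{user}"]
        if user in self.team_of:
            keys.append(f"team:{self.team_of[user]}")
        return [k for k in keys if k in self.budgets]

    def status(self, user: str) -> dict[str, int]:
        """Remaining credits per applicable budget, as shown to the user."""
        return {k: self.budgets[k].remaining for k in self._keys(user)}

    def can_chat(self, user: str) -> bool:
        """False as soon as any applicable budget is used up.
        Users without any assigned budget are not limited in this sketch."""
        return all(left > 0 for left in self.status(user).values())

    def charge(self, user: str, cost_eur: float) -> None:
        """Book the cost of one LLM request against all applicable budgets."""
        credits = round(cost_eur * CREDITS_PER_EURO)
        for k in self._keys(user):
            self.budgets[k].used += credits

    def reset_period(self) -> None:
        """Start a new period (e.g. monthly): usage drops back to zero."""
        for budget in self.budgets.values():
            budget.used = 0


mgr = BudgetManager()
mgr.budgets["user:alice"] = Budget(limit=500)
mgr.budgets["team:sales"] = Budget(limit=2000)
mgr.team_of["alice"] = "sales"

mgr.charge("alice", 3.0)
print(mgr.status("alice"))    # {'user:alice': 200, 'team:sales': 1700}
mgr.charge("alice", 2.5)
print(mgr.can_chat("alice"))  # False: personal budget exhausted, chat pauses
mgr.reset_period()
print(mgr.can_chat("alice"))  # True
```

Checking both the personal and the team budget means a single heavy user cannot use up a team's allowance unnoticed, while the team limit still caps the department's total spend.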

4. Secure authentication – protection for sensitive data

For companies, secure and flexible authentication is often essential. Because RAG systems frequently work with sensitive and confidential information, a well-thought-out authentication concept is indispensable.
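Role-based access control on top of such a login could look roughly like the sketch below. It assumes the user has already signed in and that the token has been validated by an authentication library. In Microsoft Entra ID, app roles assigned to a user are delivered in the token's `roles` claim; the role names and permissions shown here are hypothetical:

```python
from __future__ import annotations

# Hypothetical mapping from app roles to chatbot permissions. In Microsoft
# Entra ID, app roles assigned to a user arrive in the token's "roles" claim.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "Chat.User": frozenset({"chat", "upload_documents"}),
    "HR.Member": frozenset({"chat", "source:hr-guidelines"}),
    "Chat.Admin": frozenset({"chat", "edit_prompts", "view_feedback", "manage_budgets"}),
}


def permissions_from_claims(claims: dict) -> set[str]:
    """Union of the permissions of all roles in already validated token claims.
    Unknown roles grant nothing (deny by default)."""
    permissions: set[str] = set()
    for role in claims.get("roles", []):
        permissions |= ROLE_PERMISSIONS.get(role, frozenset())
    return permissions


def require(claims: dict, permission: str) -> None:
    """Raise PermissionError unless the claims grant the given permission."""
    if permission not in permissions_from_claims(claims):
        raise PermissionError(f"missing permission: {permission}")


hr_claims = {"roles": ["Chat.User", "HR.Member"]}
print(sorted(permissions_from_claims(hr_claims)))
# ['chat', 'source:hr-guidelines', 'upload_documents']
require(hr_claims, "source:hr-guidelines")  # passes silently
try:
    require(hr_claims, "edit_prompts")
except PermissionError as err:
    print(err)  # missing permission: edit_prompts
```

Keeping the role-to-permission mapping in the application means role assignments stay in the existing identity system, while the chatbot decides which knowledge sources and functions each role unlocks.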

5. Flexible user interface

Our current solution combines the most requested front-end features from various projects and thus offers a user interface that can be customized individually. Functions can be hidden or extended to meet specific requirements.
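Citations are one of the front-end features the interface renders. A sketch of how the sources of an answer could be turned into a numbered reference list, assuming each retrieved chunk carries its document URL and, for PDFs, a page number. The `#page=` fragment is understood by many PDF viewers, including common browser viewers, to open a specific page; the class, titles and URLs are hypothetical:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    title: str
    url: str                 # location of the original document
    page: int | None = None  # 1-based PDF page, if known


def citation_link(src: Source) -> str:
    """Deep link to the source; PDFs get a #page= fragment."""
    if src.page is not None and src.url.lower().endswith(".pdf"):
        return f"{src.url}#page={src.page}"
    return src.url


def render_citations(sources: list[Source]) -> str:
    """Numbered reference list in Markdown, appended below an answer."""
    lines = []
    for number, src in enumerate(sources, start=1):
        label = src.title if src.page is None else f"{src.title}, p. {src.page}"
        lines.append(f"[{number}] [{label}]({citation_link(src)})")
    return "\n".join(lines)


print(render_citations([
    Source("Company agreement on pensions", "https://intranet.example.com/docs/pension.pdf", page=4),
    Source("HR FAQ", "https://intranet.example.com/hr/faq"),
]))
# [1] [Company agreement on pensions, p. 4](https://intranet.example.com/docs/pension.pdf#page=4)
# [2] [HR FAQ](https://intranet.example.com/hr/faq)
```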

Conclusion: Fast start, flexible customization, transparent control

Our chatbot solution is based on the experience gained from numerous projects and enables companies to use language models in a targeted and data protection-compliant manner. Specific internal knowledge sources such as SharePoint, OneDrive or individual documents can be integrated efficiently.

Thanks to a flexible code base, the system can be quickly adapted to different use cases. Functions such as feedback integration, budget management and secure authentication ensure that companies retain control at all times – over sensitive data and costs. The system therefore not only offers a practical solution for dealing with company knowledge, but also the necessary transparency and security for sustainable use.

Are you curious? Then we would be happy to show you our system in a live demo in a personal meeting and answer your questions. Just write to us!